The bard ponders #NoEstimates with some real data

Chris Verhoef and I decided to look for some real project data to explore the value of software estimates and #NoEstimates. We have submitted our research as an article to IEEE for peer review. The complete paper can be found here, and some highly related materials on our prior and ongoing estimation research (M.U.S.E.) can be found here. The following is a summary of the paper.

Abstract: A common approach in agile projects is to use story points, velocity and burnup charts to provide a means for predicting release date or project scope. Another approach that is proposed is to abandon story point estimation and just count stories using a similar burnup chart. We analyzed project data from 55 projects claiming to use agile methods to investigate the predictive value of story point estimation and velocity for project forecasts. The data came from nine organizations ranging from startups to large multinational enterprises. We found that projections based on throughput (story counts) were essentially identical to those based on velocity (story points). Neither velocity nor throughput was a great predictor, as the uncertainty bands were rather large. Through the use of a simulation model we replicated our findings, which aids in understanding the boundary conditions for when story point estimates may be better predictors.

Key Findings

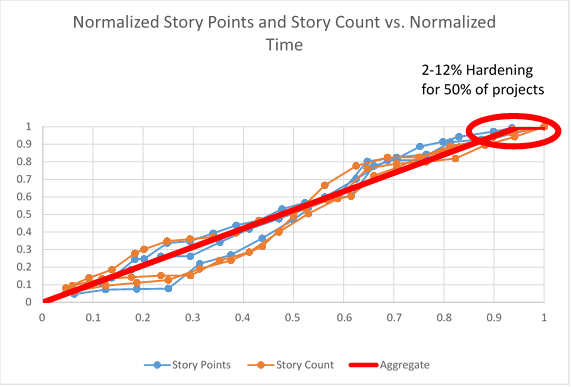

The first thing we noticed was that the normalized burnup charts for Story Points (Velocity) and Story Count (Throughput) were nearly identical. We also found that roughly 50% of the projects plateaued and then required 2-12% of the total time to finally conclude. We associate that plateau with a probable hardening and/or release readiness period. It is useful to recognize this so that teams can plan accordingly.

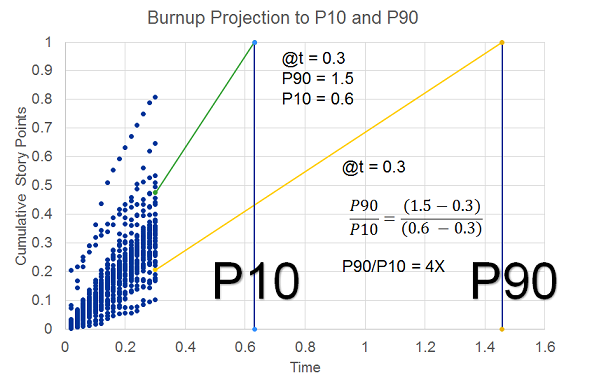

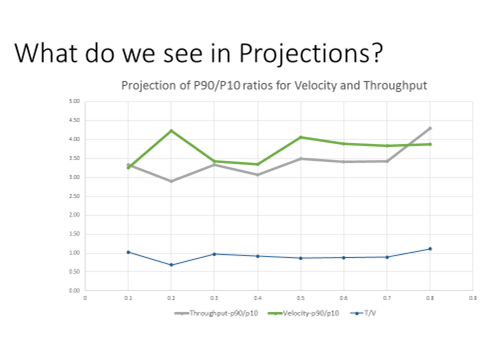

Next we looked at the projection of release date based on the remaining story points using average velocity, or the remaining story count using average throughput. To compare the approaches we used the P90/P10 ratio as an indicator of range, where P90 is the 90th percentile and P10 is the 10th percentile. This ratio is commonly used in domains where the range of the distribution is rather large, such as wealth distributions and oil and gas exploration. As a concrete example, the P90/P10 ratio for income inequality is, roughly speaking, the ratio of what professionals like doctors and lawyers earn to what cleaners and fast food workers earn. One of the nice attributes of the P90/P10 ratio is that it uniquely describes the distribution shape for either a lognormal or Weibull distribution.
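To make the last point concrete for the lognormal case: the P90/P10 ratio depends only on the shape parameter sigma, since P90/P10 = exp(2 · z90 · sigma), where z90 is the 90th percentile of the standard normal. The sketch below is an illustration, not code from the paper; the function names are our own.

```python
import math
from statistics import NormalDist

# 90th percentile of the standard normal distribution (about 1.2816).
Z90 = NormalDist().inv_cdf(0.90)

def lognormal_sigma_from_ratio(p90_p10: float) -> float:
    """Shape parameter sigma of a lognormal with the given P90/P10 ratio."""
    if p90_p10 < 1.0:
        raise ValueError("P90/P10 must be at least 1")
    return math.log(p90_p10) / (2 * Z90)

def ratio_from_lognormal_sigma(sigma: float) -> float:
    """P90/P10 ratio of a lognormal with shape parameter sigma."""
    return math.exp(2 * Z90 * sigma)

if __name__ == "__main__":
    sigma = lognormal_sigma_from_ratio(3.5)
    print(f"sigma for a P90/P10 of 3.5: {sigma:.3f}")
```

The scale parameter (the median) cancels out of the ratio, which is why a single number is enough to pin down the shape.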

At each iteration we made projections based on the remaining work using the average velocity and throughput. This is a standard approach in agile projects and is mathematically identical to extrapolation using traditional project management Earned Value Management (EVM). We compare these projections against the known actuals to determine the relative errors. The figure below shows the burnup chart for all projects plotted on the same axis scale. In this particular case we have data through t=0.3. Then using the velocity thus far we project out the anticipated release date. The error in the projection is the delta from 1.0. On this same figure we show the P90 and the P10 projections. To determine the actual error bands we subtract out the current projection time (t=0.3). For this chart (with approximations to simplify the math) we have P90 of 1.5, and P10 of 0.6. The revised P90 and P10 are 1.2 and 0.3 respectively, which gives a P90/P10 ratio of 4.0.
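The arithmetic in this paragraph can be sketched in a few lines. This is a minimal illustration under the assumption that time and scope are normalized so the actual finish is at 1.0; the function names are hypothetical and not taken from the paper.

```python
def project_finish(elapsed: float, done: float, total: float) -> float:
    """Projected finish time, extrapolating the average rate observed so far.

    Works for velocity (done/total in story points) or for
    throughput (done/total in story counts).
    """
    if elapsed <= 0 or done <= 0:
        raise ValueError("need some elapsed time and some completed work")
    average_rate = done / elapsed
    return elapsed + (total - done) / average_rate

def remaining_p90_p10(p90: float, p10: float, now: float) -> float:
    """P90/P10 ratio of the remaining time, after subtracting time already spent."""
    if p10 <= now:
        raise ValueError("P10 projection must lie after the current time")
    return (p90 - now) / (p10 - now)

if __name__ == "__main__":
    # Worked example from the text: projections of 1.5 (P90) and 0.6 (P10) at t=0.3.
    print(remaining_p90_p10(1.5, 0.6, 0.3))
```

With the figures from the text, the remaining-time band is 1.2 and 0.3, giving the ratio of 4.0 quoted above.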

What we discovered was that there was almost no difference in the accuracy band of the velocity projections relative to the throughput projections. In fact the throughput projections were marginally better than the velocity projections. In both cases we found that the accuracy of the projections did not improve as a function of time, consistent with our prior findings about the Cone of Uncertainty [Little] [Eveleens and Verhoef]. Neither velocity nor throughput is a particularly good predictor, however, with a P90/P10 ratio on the order of 3.5. In practical terms, whether using velocity or throughput, if a team forecasts that they have about 6 months remaining, the P10 to P90 band (80% confidence) is roughly 3.2 to 11.2 months. This does not bode well for teams or stakeholders who expect estimates to be commitments, or even to be accurate to within 25%.
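The 3.2 to 11.2 month band is what one gets by assuming the forecast sits at the geometric midpoint of the band, so that P10 = forecast / sqrt(ratio) and P90 = forecast × sqrt(ratio). That placement is our assumption for illustration; the summary above does not say how the band was positioned. A small sketch with a hypothetical helper:

```python
import math

def forecast_band(forecast: float, p90_p10: float) -> tuple[float, float]:
    """Return (P10, P90) for a forecast, assuming the forecast is the
    geometric midpoint of the band (an assumption, not from the paper)."""
    if forecast <= 0 or p90_p10 < 1.0:
        raise ValueError("forecast must be positive and ratio at least 1")
    half_width = math.sqrt(p90_p10)
    return forecast / half_width, forecast * half_width

if __name__ == "__main__":
    low, high = forecast_band(6.0, 3.5)
    print(f"P10 = {low:.1f} months, P90 = {high:.1f} months")
```

For a 6 month forecast and a ratio of 3.5 this gives about 3.2 and 11.2 months, matching the figures in the text.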

In addition to looking at the raw data, we built a Monte Carlo simulation to explore the impact of Story Point Distribution, Estimation Accuracy, Hardening time, and Estimation Bucketing Approach. We started with the empirical curves from the data and also tried lognormal and Weibull curve fits. The data showed a correlation between Story Points and velocity which we also incorporated in the simulation. We were able to achieve a very good match to the data with overall P90/P10 ratios near 3.5. Once we had this base we were able to run some sensitivity analysis to see what situations resulted in velocity providing improved projections. The simulations showed throughput projections to be essentially identical to velocity projections. One might think that improved estimation accuracy would favor velocity. While velocity projections are improved, the throughput projections are equally improved. The only time we found an advantage to using velocity was when the range of story point distribution is very large. This is good news as the team has a lot to say about how they split their stories so as to reduce the range of the story distribution.
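For readers who want to experiment with the idea, here is a deliberately simplified Monte Carlo sketch. It is not the simulation from the paper: it assumes lognormal story sizes, lognormal multiplicative estimation error, constant team capacity, and no hardening, bucketing, or size/velocity correlation. All parameter names and default values are hypothetical.

```python
import random
from statistics import quantiles

def simulate_project(rng, n_stories=100, size_sigma=0.6, est_sigma=0.5,
                     at_fraction=0.3):
    """Return (velocity_error, throughput_error) for one simulated project.

    Each error is projected remaining time / actual remaining time,
    measured when at_fraction of the project's actual duration has elapsed.
    """
    # True effort per story, and a noisy story point estimate of it.
    sizes = [rng.lognormvariate(0.0, size_sigma) for _ in range(n_stories)]
    estimates = [s * rng.lognormvariate(0.0, est_sigma) for s in sizes]
    total_time = sum(sizes)  # capacity of one effort unit per time unit
    now = at_fraction * total_time
    # Count the stories fully completed by `now`, worked in order.
    elapsed, k = 0.0, 0
    while k < n_stories and elapsed + sizes[k] <= now:
        elapsed += sizes[k]
        k += 1
    if k == 0 or k == n_stories:
        return None  # nothing to extrapolate from, or nothing left to do
    remaining_actual = total_time - now
    by_velocity = now * sum(estimates[k:]) / sum(estimates[:k])
    by_throughput = now * (n_stories - k) / k
    return by_velocity / remaining_actual, by_throughput / remaining_actual

def p90_p10(values):
    """Ratio of the 90th to the 10th percentile of positive values."""
    deciles = quantiles(values, n=10)  # nine cut points: P10 ... P90
    return deciles[-1] / deciles[0]

def compare(n_projects=2000, seed=1, **kwargs):
    """Return (velocity P90/P10, throughput P90/P10) over many projects."""
    rng = random.Random(seed)
    runs = [r for r in (simulate_project(rng, **kwargs)
                        for _ in range(n_projects)) if r]
    return p90_p10([v for v, _ in runs]), p90_p10([t for _, t in runs])

if __name__ == "__main__":
    for size_sigma in (0.3, 0.6, 1.2):
        v, t = compare(size_sigma=size_sigma)
        print(f"size_sigma={size_sigma}: velocity {v:.2f}, throughput {t:.2f}")
```

Varying `size_sigma` (the spread of story sizes) and `est_sigma` (estimation error) gives a rough feel for the sensitivity analysis described above. Because the model is so stripped down, its numbers should not be expected to match the ratios reported in the paper.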

Based on these findings, we made some observations about the implications on critical decisions that teams and organizations make.

| Decisions to steer towards the release | We observed from both the data and the simulations that story point estimates provide minimal improvement in forecasting compared to using throughput for typically observed estimation accuracy. If story point estimates are very accurate (unlikely) then they may provide value. The simulations showed that estimates may also help when there is a large range of story distribution, although an alternative approach would be to split large stories so that the overall distribution is not large. When estimating a container of mixed nuts, we don’t really care too much whether we have smaller peanuts or larger brazil nuts, but we do want to spot any coconuts! |

| Decisions to help with managing iterations | Many teams use detailed task estimation to help them manage their iterations. We did not have access to task estimations for this study; however, the findings with story points should be very enlightening. Task estimation and tracking can often be a very time-consuming activity. Teams should look at how much value they are getting from these estimations. |

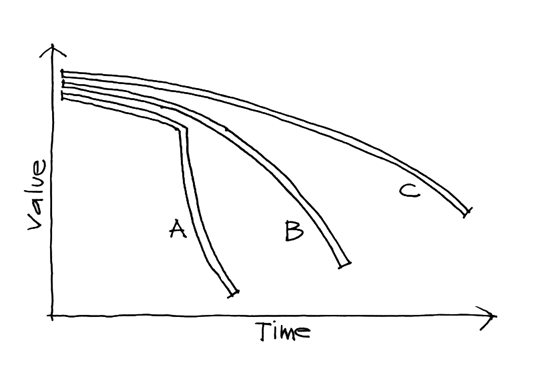

| Decisions at project sanction | Some level of macro-estimation of costs and benefits is likely necessary for business decisions. If the benefits are so overwhelming that the project should be done at any cost, then it could be wasteful to spend time on estimating something that does not impact the decision. In general it is wasteful to spend more time on cost estimation than on benefits. In fact, a study of a number of projects at a major organization found that value generated was negatively correlated to cost forecast accuracy. Too much emphasis on cost or on reduction of uncertainty can destroy forecasting accuracy of value predictions. |


Practitioner’s Guide

Velocity vs. Throughput

With the typically observed story point estimate accuracy range, our results show there is minimal added value to using velocity over using throughput for estimating purposes. When story size distribution is very large, then velocity has better predictive power than throughput.

Hardening

In about half the projects there was a period at the end of the project, lasting 2-12% of the overall timeline, with zero velocity, most likely for release and hardening activities. Unless teams have reasons to believe that they will not require such activities, we recommend either allocating a corresponding time buffer or adding stories (and story points if used) for such activities.

Estimation Accuracy

This study provides additional confirmation that the range of uncertainty with software estimation accuracy is significant and we can confidently say that this range of uncertainty is much larger than many decision makers realize. An interesting finding was that improvements in estimation accuracy helped throughput projections just as much as velocity projections. So while improving estimation accuracy may be a noble goal it is not a reason to favor velocity over throughput.

Bucketing of Estimates

While there was some degradation of the predictive power of velocity as buckets get very large, the overall impact is still very small. Since bucketing approaches are used to expedite estimation, this finding suggests that teams may continue to use them should they find value in estimating at all. However, we have seen situations where religious adherence to bucketing approaches slowed down or distorted the estimation process, and in those circumstances teams may be better served by simpler approaches. Bucketing or #NoBucketing? You decide.

Uncertainty over Time

Perhaps a bit more bad news for teams and decision makers is that it doesn’t get better over time. The range of relative uncertainty of the work left to be done is large and stays large over time, which is consistent with other findings regarding the Cone of Uncertainty.

Decisions

Decisions are being made at multiple levels. For some decisions there may be value to estimates of stories or story points. But those estimates most likely have very large uncertainty ranges. The important thing for the team is to understand the decisions they care about, and to comprehend the range of uncertainty well enough to make the appropriate decisions. Decision makers would be wise to learn more about making decisions under uncertainty. There is significant research on this in many other industries (e.g., oil and gas exploration, financial institutions, and actuarial practice).

Estimates or #NoEstimates

To paraphrase Polonius’ advice to Laertes,

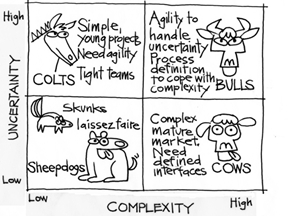

Complexity includes project composition such as team size, geographic distribution and team maturity.
